What I've learned building AI agents for financial research (and why tools matter)

July 2025 • Marko Kangrga, AI Systems Architect

Lessons from the trenches of building Bigdata.com's research agent

There's something almost magical about watching a well-designed research agent work. Query after query, it methodically searches, synthesizes, and delivers insights that would have taken a human analyst hours to compile. But behind this magic lies a deceptively complex challenge: tools.

Over the past year and change of building Bigdata.com's AI research agents, I've discovered that tools are both your greatest superpower and your most dangerous liability. They're the difference between an agent that can actually do things and one that just talks about doing them. But as anyone who's tried to give an LLM access to "all the tools" can tell you, more tools don't necessarily mean better results.

The tool paradox

Here's the thing about tools that doesn't get talked about enough: they're interfaces. And just like human-computer interfaces, agent-tool interfaces can be brilliantly intuitive or catastrophically confusing.

I was reminded of this recently when Cursor IDE started showing warnings if users enable too many MCP (Model Context Protocol) tools at once.

The message was polite but clear: "Hey, maybe don't give your agent access to literally everything." Why? Because tool sprawl is real, and it's becoming the new version of database sprawl from the early 2000s.

According to a recent CIO article, vendors have spent the last year rushing to add agentic AI products to their offerings. The result is what experts are calling "agent sprawl": agents can operate in more areas, but the added complexity and security concerns can hurt ROI.

The fundamental issue is this: when an agent has access to 47 different tools, each with varying quality documentation and overlapping capabilities, it spends more time figuring out which tool to use than actually solving your problem. It's like being lost in a hardware store where every aisle looks promising but nothing is quite right.

Financial research: Where tools actually matter

This tool challenge becomes particularly acute in financial research, where the stakes are high and the data sources are vast. Traditional financial platforms like Bloomberg Terminal have solved this by being opinionated – they give you everything, but through carefully curated interfaces refined over decades.

Bloomberg offers comprehensive market coverage across all asset classes with their (genuinely awesome) $32,000/year terminals. But here's where it gets interesting: while Bloomberg offers comprehensive data, modern AI research agents can be selectively comprehensive. Instead of giving users access to everything, you can give them access to everything relevant.

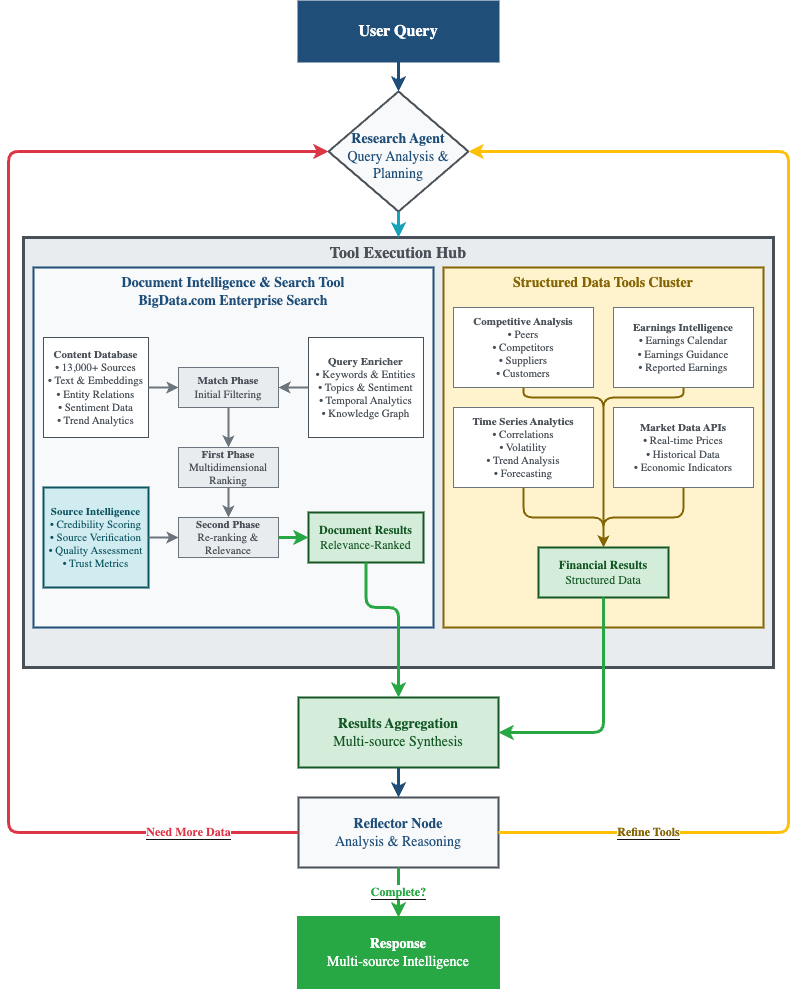

This is exactly what we've been building at Bigdata.com – an AI research agent that combines the depth of premium financial data with the intelligence to know what matters for your specific question.

The art of selective intelligence

Building a financial research agent that actually works requires making three crucial decisions about tools:

1. Curation over comprehensiveness

Not all data sources are created equal. While web search might give you breadth, specialized financial data sources give you depth and reliability. RavenPack's ecosystem (which powers Bigdata.com) comprises key high-quality content sources (premium news, press wires, call transcripts, regulatory filings, expert network content, and more) with sophisticated filtering capabilities that let you focus on exactly what matters. Case in point, using the Bigdata Python SDK:

... this isn't just convenient – it's the difference between finding signal in the world of LLMs and drowning in noise.
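To make the curation idea concrete without depending on any particular SDK, here's a minimal sketch in plain Python. The `Document` class, the `source_type` labels, and the `curate` helper are all hypothetical names invented for illustration; they are not part of the Bigdata SDK.

```python
from dataclasses import dataclass

# Hypothetical source categories, loosely mirroring the content types named above.
CURATED_SOURCE_TYPES = frozenset(
    {"premium_news", "press_wire", "transcript", "filing", "expert_network"}
)


@dataclass(frozen=True)
class Document:
    title: str
    source_type: str  # hypothetical label, e.g. "transcript" or "web"


def curate(documents, allowed_types=CURATED_SOURCE_TYPES):
    """Keep only documents whose source type is on the curated allowlist."""
    return [doc for doc in documents if doc.source_type in allowed_types]


docs = [
    Document("Q3 earnings call", "transcript"),
    Document("Anonymous forum rumor", "web"),
    Document("10-K annual report", "filing"),
]

curated = curate(docs)
print([doc.title for doc in curated])
```

The point of the allowlist is that the agent never sees the forum rumor at all, so it can't be tempted to cite it.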

2. Real-time over historical

Financial markets move fast. Yesterday's analysis is often today's outdated assumption. Real-time data streaming ensures that agents always operate on the latest information, which is critical when market conditions can change between the time your agent starts its research and when it finishes.

For example, when analyzing semiconductor supply chain risks, you need access to this morning's earnings call transcript, not last quarter's static filing. At Bigdata.com, our agents can access breaking news and live market data to ensure insights reflect the current state of the world when decisions are being made.
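One simple way to think about the freshness requirement is as a filter the agent applies before it reasons over anything. The sketch below assumes each item carries a timezone-aware `published` timestamp; the `fresh_only` helper and the sample items are hypothetical and only illustrate the idea, not how Bigdata.com implements it.

```python
from datetime import datetime, timedelta, timezone


def fresh_only(items, now, max_age):
    """Return items published within max_age of now, newest first."""
    cutoff = now - max_age
    recent = [item for item in items if item["published"] >= cutoff]
    return sorted(recent, key=lambda item: item["published"], reverse=True)


# A fixed "now" keeps the example deterministic; a real agent would use the current time.
now = datetime(2025, 7, 15, 14, 0, tzinfo=timezone.utc)
items = [
    {"title": "This morning's earnings call transcript", "published": now - timedelta(hours=5)},
    {"title": "Last quarter's filing", "published": now - timedelta(days=90)},
    {"title": "Breaking supply chain headline", "published": now - timedelta(minutes=20)},
]

latest = fresh_only(items, now=now, max_age=timedelta(days=1))
print([item["title"] for item in latest])
```

Passing `now` explicitly also makes it easy to re-run the same filter at the end of a long research task and check whether anything newer has arrived.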

3. Entity intelligence over keyword matching

Here's where things get sophisticated. Most financial research tools still rely on keyword matching and basic search. But with RavenPack's entity and event detection (integrated into Bigdata.com), when you ask about "Microsoft's recent acquisitions," your agent knows to look for Entity(MICROSOFT) AND Topic("business,mergers-acquisitions,announcement") rather than just searching for the words "Microsoft" and "acquisitions."

This kind of semantic understanding transforms how agents interact with financial data, moving from pattern matching to actual comprehension.
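Here's a toy illustration of the difference. The `Entity`, `Topic`, and `And` classes below are local stand-ins written for this post, not the SDK's classes, and the sketch assumes an upstream tagger has already attached entity IDs and topic labels to each document. The second topic label is made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    text: str
    entities: frozenset  # entity IDs from an upstream tagger (assumed)
    topics: frozenset    # topic labels from an upstream tagger (assumed)


class Query:
    """Base class so that queries can be combined with the & operator."""

    def matches(self, doc):
        raise NotImplementedError

    def __and__(self, other):
        return And(self, other)


@dataclass(frozen=True)
class Entity(Query):
    entity_id: str

    def matches(self, doc):
        return self.entity_id in doc.entities


@dataclass(frozen=True)
class Topic(Query):
    label: str

    def matches(self, doc):
        return self.label in doc.topics


@dataclass(frozen=True)
class And(Query):
    left: Query
    right: Query

    def matches(self, doc):
        return self.left.matches(doc) and self.right.matches(doc)


def keyword_match(doc, *keywords):
    """Naive baseline: every keyword must appear literally in the text."""
    text = doc.text.lower()
    return all(keyword.lower() in text for keyword in keywords)


MNA = "business,mergers-acquisitions,announcement"
docs = [
    Doc("The Redmond software giant agreed to buy a gaming studio.",
        frozenset({"MICROSOFT"}), frozenset({MNA})),
    Doc("Microsoft Excel tips for modeling acquisitions.",
        frozenset({"MICROSOFT"}), frozenset({"technology,software,how-to"})),
]

query = Entity("MICROSOFT") & Topic(MNA)
keyword_hits = [d.text for d in docs if keyword_match(d, "Microsoft", "acquisitions")]
structured_hits = [d.text for d in docs if query.matches(d)]
print(keyword_hits)
print(structured_hits)
```

The keyword baseline returns the spreadsheet tutorial and misses the actual deal, because the deal story never uses either word. The structured query gets it the other way around, which is what you want.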

The LangGraph advantage

What makes modern research agents particularly powerful is their architecture. LangGraph provides a very low-level, controllable agentic framework with no hidden prompts and no obfuscated cognitive architecture. This transparency matters enormously when building financial tools where you need to understand not just what your agent found, but how it found it.

The ReAct (Reason + Action) pattern that LangGraph enables naturally fits financial research workflows:

1. Think: "I need to understand Tesla's supply chain risks"

2. Act: Search transcripts for supply chain mentions

3. Observe: Find relevant discussions from Q3 earnings calls

4. Think: "This mentions chip shortages, let me search for broader semiconductor industry trends"

5. Act: Query entity-based search for semiconductor supply chain news

6. Observe: Synthesize findings into coherent analysis
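The loop above can be sketched in a few lines of plain Python. To be clear about the assumptions: this is not LangGraph's API, the two tools return canned strings instead of calling a real service, and `scripted_policy` is a hard-coded stand-in for the LLM's "Think" step. All names here are hypothetical.

```python
def search_transcripts(query):
    """Hypothetical tool: returns canned text instead of calling a real service."""
    return "Q3 earnings call: management cited chip shortages as a supply chain risk."


def search_news(query):
    """Hypothetical tool: returns canned text instead of calling a real service."""
    return "Semiconductor lead times remain elevated across the industry."


TOOLS = {"search_transcripts": search_transcripts, "search_news": search_news}


def scripted_policy(question, observations):
    """Stand-in for the LLM: choose the next step from what has been observed so far."""
    if not observations:
        return ("act", "search_transcripts", "Tesla supply chain")
    if len(observations) == 1 and "chip shortages" in observations[0]:
        return ("act", "search_news", "semiconductor supply chain")
    return ("finish", None, " ".join(observations))


def run_react(question, policy, tools, max_steps=5):
    """Alternate think/act/observe until the policy finishes or the step budget runs out."""
    observations, trace = [], []
    for _ in range(max_steps):
        kind, tool_name, payload = policy(question, observations)  # Think
        if kind == "finish":
            return payload, trace
        observation = tools[tool_name](payload)                     # Act
        trace.append((tool_name, payload))
        observations.append(observation)                            # Observe
    return " ".join(observations), trace


answer, trace = run_react("What are Tesla's supply chain risks?", scripted_policy, TOOLS)
print(trace)
print(answer)
```

Keeping the `trace` alongside the answer is the part that matters for financial work: it records how the agent got there, not just what it found.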

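Multi-agent systems work mainly because they help spend enough tokens to solve the problem. In Anthropic's published analysis of its multi-agent research system, three factors explained 95% of the performance variance on the BrowseComp evaluation: token usage (which alone accounted for about 80%), the number of tool calls, and the choice of model.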

The human element

Perhaps the most important lesson from building research agents is that tools need descriptions as carefully crafted as the code that implements them. Bad tool descriptions can send agents down completely wrong paths, so each tool needs a distinct purpose and a clear description.
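A cheap first check for "distinct purpose, clear description" is a lint pass over the tool registry. The sketch below is a rough heuristic I'm assuming for illustration, not something from our production stack: it flags descriptions that are too short and pairs whose wording overlaps heavily (Jaccard similarity over words). The tool names and thresholds are hypothetical.

```python
import re
from itertools import combinations


def _words(text):
    """Lowercased set of alphabetic words in a description."""
    return set(re.findall(r"[a-z]+", text.lower()))


def lint_tool_descriptions(tools, min_words=6, max_overlap=0.6):
    """Return warnings for thin descriptions and for pairs that read almost the same."""
    warnings = []
    for name, description in tools.items():
        if len(description.split()) < min_words:
            warnings.append(f"{name}: description is too short")
    for (name_a, desc_a), (name_b, desc_b) in combinations(tools.items(), 2):
        a, b = _words(desc_a), _words(desc_b)
        union = a | b
        overlap = len(a & b) / len(union) if union else 0.0  # Jaccard similarity
        if overlap > max_overlap:
            warnings.append(f"{name_a} / {name_b}: descriptions overlap heavily")
    return warnings


tools = {
    "search_transcripts": "Search earnings call transcripts for passages mentioning a company or theme.",
    "search_calls": "Search earnings call transcripts for passages mentioning a company or topic.",
    "get_price": "Get price.",
}

warnings = lint_tool_descriptions(tools)
for warning in warnings:
    print(warning)
```

Word overlap is a blunt instrument, and two tools can be confusable without sharing vocabulary. Still, it catches the obvious cases before an agent ever has to choose between them.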

We've found that having agents test and improve their own tool descriptions creates a virtuous cycle. A tool-testing agent, when given a flawed MCP tool, attempts to use the tool and then rewrites the tool description to avoid failures, resulting in a 40% decrease in task completion time for future agents.

This isn't just engineering—it's applied epistemology. How do you describe complex financial operations in a way that an AI can understand and use correctly? At Bigdata.com, we're constantly refining our tool descriptions based on real usage patterns, creating a feedback loop that makes our agents more reliable over time.

Looking forward

The future of financial research agents isn't about having more tools - it's about having better tools that work together intelligently. Agent-tool interfaces are as critical as human-computer interfaces, and we're still in the early days of figuring out what good agent UX looks like.

The companies that get this right will build agents that feel less like sophisticated search engines and more like research partners - systems that don't just find information, but understand what you're really trying to figure out.

And maybe, just maybe, they'll help us avoid the tool sprawl that's already starting to plague the agent ecosystem. Because the goal isn't to build agents that can use every tool - it's to build agents that know which tools to use, when to use them, and how to use them well.

This is just the beginning of my journey building AI research agents. As the space evolves rapidly with new frameworks, tools, and approaches emerging regularly, I'll continue sharing insights and lessons learned from the trenches. The future of AI-powered financial research depends on getting these fundamentals right.